Using a (compatible) JTAG programmer with the EBAZ4205 FPGA board

Overview of the EBAZ4205 FPGA board

I used the links below as references.

How to use the (compatible) JTAG programmer

This describes the procedure on Ubuntu 20.04. To install the driver that lets Vivado recognize the (compatible) JTAG programmer, run

sudo ./Vivado/2021.1/data/xicom/cable_drivers/lin64/install_script/install_drivers/install_drivers

in a terminal. If "Successful" is displayed, the installation worked.

At this point, just to be safe, I closed Vivado and rebooted the PC.

Reopen Vivado and, in the left pane, click "Open Hardware Manager" -> "Open Target" -> "Auto Connect".

Vivado then detected the JTAG programmer automatically. The screen at that point is shown here.

Automatic posting to WordPress with Python

1. Installation

pip install python-wordpress-xmlrpc

2. Code

The URL to specify is https://xxxxx.com/xmlrpc.php, that is, the site's normal URL with /xmlrpc.php appended.

```python
from wordpress_xmlrpc import Client, WordPressPost
from wordpress_xmlrpc.methods.posts import NewPost

# URL of the target site, plus the account name and password
wp = Client('https://xxxxx.com/xmlrpc.php', 'username', 'password')

post = WordPressPost()
# Title of the post
post.title = 'WordPress post test from Python'
# Submit as a draft (use 'publish' to publish immediately)
post.post_status = 'draft'
# Body of the post
post.content = 'Posted automatically to WordPress from Python.'
post.terms_names = {
    'post_tag': ['Python', 'WordPress'],  # tags
    'category': ['WordPress', 'Python'],  # categories
}
wp.call(NewPost(post))
```
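It can help to see roughly what such a call sends. The sketch below uses only the standard library and assumes the library issues WordPress's `wp.newPost` method with `(blog_id, username, password, content)` parameters, where blog_id is assumed to be 0. The content struct field names (`post_title`, `post_content`, `post_status`, `terms_names`) are likewise an assumption about the mapping. The helpers `xmlrpc_endpoint` and `build_new_post_request` are hypothetical, and nothing is sent over the network.

```python
import xmlrpc.client
from urllib.parse import urlsplit, urlunsplit


def xmlrpc_endpoint(site_url: str) -> str:
    """Append /xmlrpc.php to a site URL (hypothetical helper)."""
    parts = urlsplit(site_url)
    path = parts.path.rstrip("/") + "/xmlrpc.php"
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


def build_new_post_request(username: str, password: str, title: str,
                           content: str, status: str = "draft") -> str:
    """Serialize a wp.newPost call to XML without sending it (assumed format)."""
    post = {
        "post_title": title,
        "post_content": content,
        "post_status": status,  # "draft" or "publish"
        "terms_names": {
            "post_tag": ["Python", "WordPress"],
            "category": ["WordPress", "Python"],
        },
    }
    # blog_id is assumed to be 0 here
    return xmlrpc.client.dumps((0, username, password, post), methodname="wp.newPost")


if __name__ == "__main__":
    print(xmlrpc_endpoint("https://xxxxx.com/"))
    print(build_new_post_request("username", "password", "Test", "Hello"))
```

Serializing with `xmlrpc.client.dumps` lets you inspect the request body offline before pointing a real client at the site.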

3. Result

4. References

Uploading an image from a form with Laravel + Guzzle and calling a Python image-processing API

1. Introduction

As an extension of the article below, this article makes it possible to upload an image from a form and send it to a Python image-processing API.

2. Creating the controller

Create a new controller with the following command.

php artisan make:controller UploadController

Replace the contents of the generated controller (app/Http/Controllers/UploadController.php) with the following code.

```php
<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;
use GuzzleHttp\Client;

class UploadController extends Controller
{
    public function index()
    {
        return view('upload');
    }

    public function upload(Request $request)
    {
        // Save the uploaded image to storage/app/public
        $file_name = $request->file('image')->store('public');
        // store() returns "public/<generated name>", so keep only the file name
        $file_name = explode('/', $file_name)[1];
        // var_dump($file_name);

        // Prepare the API call
        $client = new Client([
            'headers' => [
                'Content-Type' => 'application/json'
            ]
        ]);

        // Read the image and base64-encode it
        // (the relative path goes through the public/storage symlink)
        $input_data = base64_encode(file_get_contents('storage/' . $file_name));

        $resp = $client->post('http://localhost:5000/image', [
            'body' => json_encode([
                [
                    'id' => 1,
                    'Image' => $input_data
                ]
            ])
        ]);

        $resp_json = json_decode($resp->getBody(), true);
        // var_dump($resp_json);
        $id = $resp_json[0]['id'];
        $result = $resp_json[0]['result'];

        return view('ai-image', [
            'id' => $id,
            'result' => $result
        ]);
    }
}
```

3. Route settings

Configure the routes in routes/web.php.

```php
<?php

use Illuminate\Support\Facades\Route;
use App\Http\Controllers\UploadController;

/*
|--------------------------------------------------------------------------
| Web Routes
|--------------------------------------------------------------------------
|
| Here is where you can register web routes for your application. These
| routes are loaded by the RouteServiceProvider within a group which
| contains the "web" middleware group. Now create something great!
|
*/

Route::get('/', function () {
    return view('welcome');
});

Route::get('/upload', [UploadController::class, 'index']);
Route::post('/upload', [UploadController::class, 'upload']);
```

4. View files

resources/views/upload.blade.php

```html
<!DOCTYPE html>
<html>
<body>
    <form method="POST" action="/upload" enctype="multipart/form-data">
        @csrf
        <input type="file" name="image">
        <button>Upload</button>
    </form>
</body>
</html>
```

resources/views/ai-image.blade.php

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>AI Image Test</title>
</head>
<body>
    <h1>id: {{ $id }}</h1>
    <img src="data:image/png;base64,{{ $result }}" />
</body>
</html>
```

upload.blade.php renders like this:

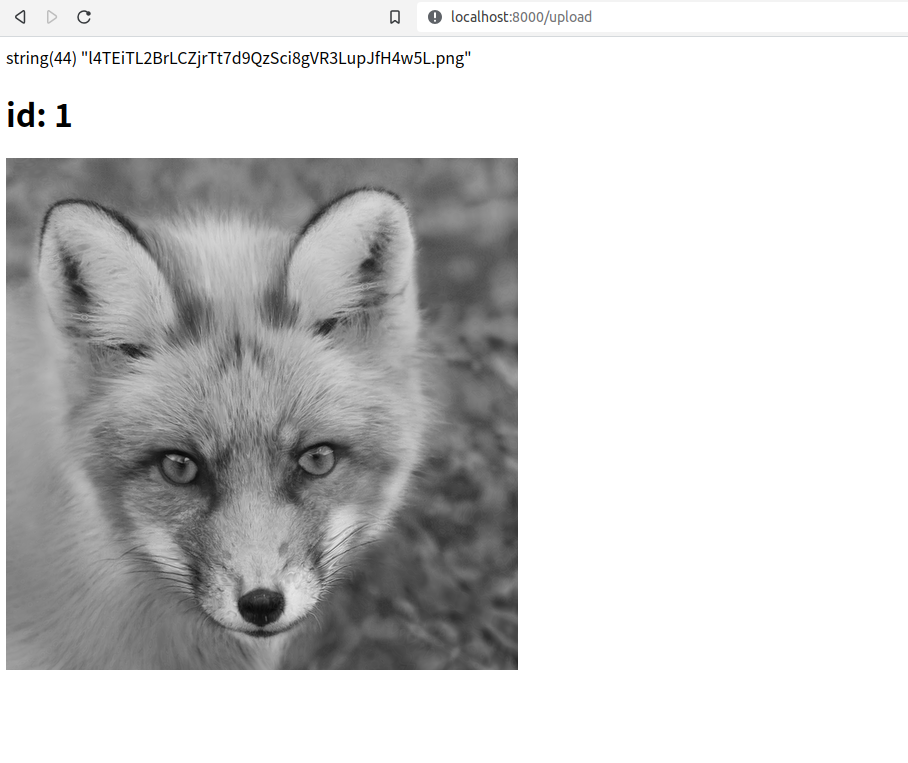

5. Result

Start the Python Flask server.

python3 app.py

Start the Laravel server.

php artisan serve --host=localhost --port=8000

Open http://localhost:8000/upload and upload an image; the result is shown below. The grayscale image came back through the Python image-processing API and was displayed in the browser.

Calling a Python image-processing API from Laravel + Guzzle (integrating PHP and Python)

1. Introduction

This article uses Guzzle from Laravel to call an image-processing API written in Python.

First, create a Laravel project.

composer create-project laravel/laravel sample

If needed, run the following command.

composer install

Next, install Guzzle with composer.

composer require guzzlehttp/guzzle

2. Preparing the image-processing API with Python + Flask

Following the article below, prepare an API that returns a grayscale image.

Here is the code again.

```python
from flask import Flask, jsonify, request
import cv2
import numpy as np
import base64
import json

app = Flask(__name__)


@app.route("/image", methods=["POST"])
def image_gray_convert():
    response = []
    params = json.loads(request.data.decode('utf-8'))
    for recieve_json in params:
        # Decode the base64 image
        img_stream = base64.b64decode(recieve_json['Image'])
        # Convert to a byte array
        img_array = np.asarray(bytearray(img_stream), dtype=np.uint8)
        # Convert to grayscale with OpenCV (flag 0 = cv2.IMREAD_GRAYSCALE)
        img_gray = cv2.imdecode(img_array, 0)
        # Save the result
        cv2.imwrite('./output/result.png', img_gray)
        # Base64-encode the saved file
        with open('./output/result.png', "rb") as f:
            img_base64 = base64.b64encode(f.read()).decode('utf-8')
        response.append({'id': recieve_json['id'], 'result': img_base64})
    return jsonify(response)


if __name__ == '__main__':
    app.run(host='localhost', port=5000, debug=True)
```

This gives you an API at http://localhost:5000/image that returns a grayscale image. The output folder must exist beforehand.

3. Preparing the Laravel side

Create a controller with the following command.

php artisan make:controller AiController

Create the storage symbolic link, which will serve as the location of the input image file.

php artisan storage:link

Place the input image file at ./storage/app/public/test.jpg.

Replace the generated controller (app/Http/Controllers/AiController.php) with the following.

```php
<?php

namespace App\Http\Controllers;

use GuzzleHttp\Client;

class AiController extends Controller
{
    public function index()
    {
        $client = new Client([
            'headers' => [
                'Content-Type' => 'application/json'
            ]
        ]);

        // storage/app/public/test.jpg, reached through the public/storage symlink
        $file_name = 'storage/test.jpg';
        $input_data = base64_encode(file_get_contents($file_name));

        $resp = $client->post('http://localhost:5000/image', [
            'body' => json_encode([
                [
                    'id' => 1,
                    'Image' => $input_data
                ]
            ])
        ]);

        $resp_json = json_decode($resp->getBody(), true);
        // var_dump($resp_json);
        $id = $resp_json[0]['id'];
        $result = $resp_json[0]['result'];

        return view('guzzel-test', [
            'id' => $id,
            'result' => $result
        ]);
    }
}
```
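To make the request format explicit, here is a rough Python sketch of the same POST that the Guzzle call builds, using only the standard library. The body is a JSON array of `{"id", "Image"}` objects with the image base64-encoded. The function name `build_image_request` is hypothetical. It only builds the request; the commented-out lines show how it could be sent while the Flask API is running.

```python
import base64
import json
import urllib.request


def build_image_request(url: str, image_bytes: bytes, image_id: int = 1) -> urllib.request.Request:
    """Build (but do not send) a POST shaped like the Guzzle call in AiController."""
    body = json.dumps([{
        "id": image_id,
        "Image": base64.b64encode(image_bytes).decode("ascii"),
    }]).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


if __name__ == "__main__":
    req = build_image_request("http://localhost:5000/image", b"\xff\xd8\xff")
    # Sending requires the Flask API to be running:
    # with urllib.request.urlopen(req) as resp:
    #     result = json.loads(resp.read())[0]["result"]
    print(req.get_method(), req.full_url)
```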

Next, configure the routes.

```php
<?php

use Illuminate\Support\Facades\Route;
use App\Http\Controllers\AiController;

/*
|--------------------------------------------------------------------------
| Web Routes
|--------------------------------------------------------------------------
|
| Here is where you can register web routes for your application. These
| routes are loaded by the RouteServiceProvider within a group which
| contains the "web" middleware group. Now create something great!
|
*/

Route::get('/', function () {
    return view('welcome');
});

Route::get('/ai', [AiController::class, 'index']);
```

Next, write the view (resources/views/guzzel-test.blade.php) as follows.

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>AI Image Test</title>
</head>
<body>
    <h1>id: {{ $id }}</h1>
    <img src="data:image/png;base64,{{ $result }}" />
</body>
</html>
```
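The `src` of the `<img>` tag is a data URI of the form `data:<MIME type>;base64,<data>`, which is why the MIME type must be something like `image/png` rather than a file path. The sketch below builds one in Python. `to_data_uri` is a hypothetical helper, and `image/png` is chosen because the Flask API writes a PNG.

```python
import base64


def to_data_uri(data: bytes, mime_type: str = "image/png") -> str:
    """Return a data URI usable as an <img> src (hypothetical helper)."""
    return f"data:{mime_type};base64,{base64.b64encode(data).decode('ascii')}"


if __name__ == "__main__":
    # The 8-byte PNG file signature
    print(to_data_uri(b"\x89PNG\r\n\x1a\n"))
```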

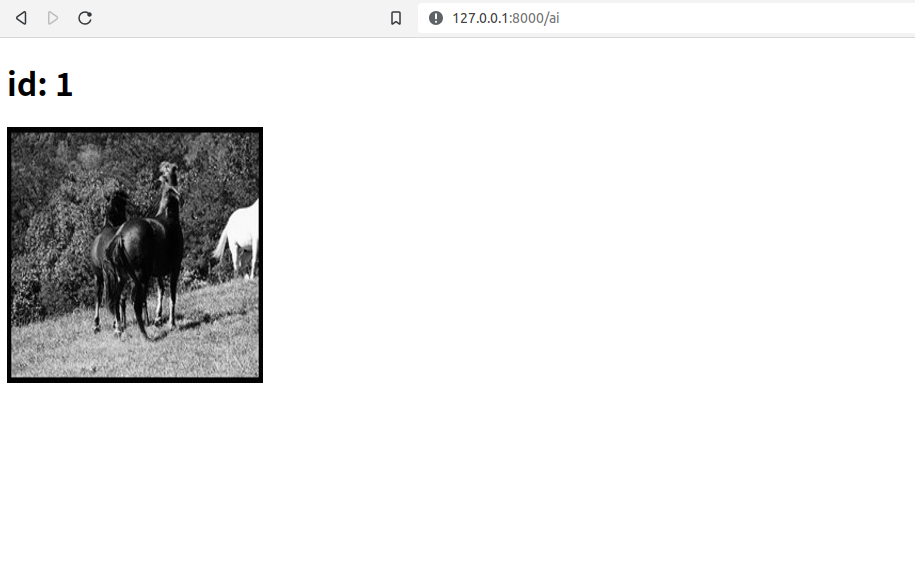

4. Result

Start the Flask server.

python3 app.py

Start the Laravel server.

php artisan serve --host=localhost --port=8000

The result of opening http://127.0.0.1:8000/ai is shown below.

The grayscale image was displayed in the browser as expected.

Setting up a Flask API that converts images to grayscale

1. Introduction

This article builds a Flask API that takes image data sent in a POST request and returns it as grayscale image data.

2. Installation

Create and activate a virtual environment.

```shell
python3 -m venv .venv
source .venv/bin/activate
```

Install the required libraries.

pip install flask opencv-python requests

3. Code for the grayscale API

Create the output folder beforehand.

```python
from flask import Flask, jsonify, request
import cv2
import numpy as np
import base64
import json

app = Flask(__name__)


@app.route("/image", methods=["POST"])
def image_gray_convert():
    response = []
    params = json.loads(request.data.decode('utf-8'))
    for recieve_json in params:
        # Decode the base64 image
        img_stream = base64.b64decode(recieve_json['Image'])
        # Convert to a byte array
        img_array = np.asarray(bytearray(img_stream), dtype=np.uint8)
        # Convert to grayscale with OpenCV (flag 0 = cv2.IMREAD_GRAYSCALE)
        img_gray = cv2.imdecode(img_array, 0)
        # Save the result
        cv2.imwrite('./output/result.png', img_gray)
        # Base64-encode the saved file
        with open('./output/result.png', "rb") as f:
            img_base64 = base64.b64encode(f.read()).decode('utf-8')
        response.append({'id': recieve_json['id'], 'result': img_base64})
    return jsonify(response)


if __name__ == '__main__':
    app.run(host='localhost', port=5000, debug=True)
```
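The grayscale step is a weighted sum of the color channels, Y = 0.299 R + 0.587 G + 0.114 B (the BT.601 luma weights that OpenCV uses for color-to-gray conversion). The pure-Python sketch below applies this to single pixels and to a small image given as rows of (R, G, B) tuples. `to_gray` and `to_gray_image` are hypothetical helpers. OpenCV itself stores pixels in BGR order, and its rounding (or the JPEG decoder's, when decoding directly to grayscale) may differ by one level.

```python
def to_gray(r: int, g: int, b: int) -> int:
    """BT.601 luma: the weighted sum used for color-to-gray conversion."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def to_gray_image(rows):
    """Convert rows of (R, G, B) tuples to rows of gray levels."""
    return [[to_gray(*px) for px in row] for row in rows]


if __name__ == "__main__":
    print(to_gray_image([[(255, 0, 0), (0, 255, 0), (0, 0, 255)]]))
```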

Start the server with the following command and leave it running.

python3 app.py

4. Code that sends the image in a POST request

Create the input folder beforehand.

I put the following image (0.jpg) in it.

```python
# post.py
import base64
import json

import cv2
import numpy as np
import requests

with open('./input/0.jpg', "rb") as f:
    img_base64 = base64.b64encode(f.read()).decode('utf-8')

url = "http://localhost:5000/image"

# Data in JSON form
json_data = [
    {
        "id": 0,
        "Image": img_base64
    }
]

# Send the POST request
response = requests.post(url, data=json.dumps(json_data))
response_json = response.json()[0]['result']
print(response_json)

# Decode the base64 image
img_stream = base64.b64decode(response_json)
# Convert to a byte array
img_array = np.asarray(bytearray(img_stream), dtype=np.uint8)
img_gray = cv2.imdecode(img_array, 0)
# Save the result (the output_recieve folder must already exist)
cv2.imwrite('./output_recieve/result.png', img_gray)
```

Run the code.

python3 post.py

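If it runs successfully, a grayscale result.png is created in the output folder.

The grayscale image data is also returned, base64-encoded, in the JSON response.

The image data obtained by parsing that JSON is saved in the output_recieve folder.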

Reinforcement learning (DQN) of end-effector position control for a 3-link robot arm with keras-rl2 + OpenAI Gym, and building the simulator

1. Introduction

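This article uses the keras-rl2 and OpenAI Gym Python libraries to run reinforcement learning (DQN) and attempts to build a simulator that controls the end-effector position of a 3-link robot arm.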

(Development environment for this article: Ubuntu 20.04.6 LTS, Python 3.8)

2. Installation

First, create a virtual environment.

python3 -m venv .venv

Activate the virtual environment.

source .venv/bin/activate

Get keras-rl2 by cloning its repository.

```shell
git clone https://github.com/wau/keras-rl2.git
cd keras-rl2
```

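Install the additional dependencies used in this article.

TensorFlow does not work if the version is too new, so install a pinned version.

Gym appears to be no longer maintained, but pin it to version 0.26.2.

(Packages that produced errors such as "package not found" were installed again separately.)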

```shell
pip install keras-rl2==1.0.5 tensorflow==2.3.0 gym==0.26.2
pip install protobuf==3.20
pip install pygame scipy
```

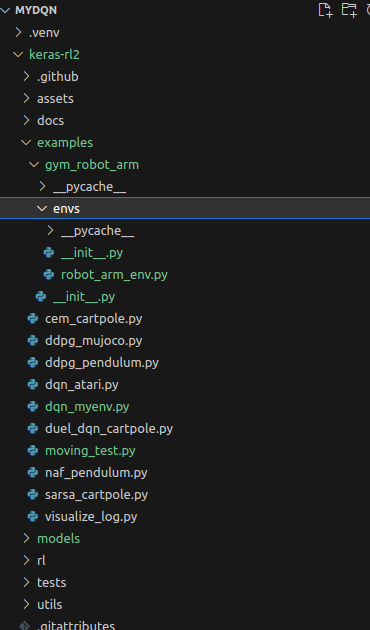

3. Creating the Gym env

Create the following three scripts, paying attention to the file names and directory structure.

```python
# gym_robot_arm/envs/__init__.py (path inferred from the import statements)
from gym_robot_arm.envs.robot_arm_env import RobotArmEnvV0
```

```python
# gym_robot_arm/envs/robot_arm_env.py
import gym
import math
import random
import pygame
import numpy as np
from gym import utils
from gym import error, spaces
from gym.utils import seeding
from scipy.spatial.distance import euclidean
import os
import sys


class RobotArmEnvV0(gym.Env):
    metadata = {'render.modes': ['human']}

    def __init__(self):
        self.set_window_size([650, 650])
        self.set_link_properties([121, 84, 97])
        self.set_increment_rate(0.01)
        self.target_pos = self.generate_random_pos()
        self.action = {0: "HOLD",
                       1: "INC_J1", 2: "DEC_J1",
                       3: "INC_J2", 4: "DEC_J2",
                       5: "INC_J3", 6: "DEC_J3",
                       7: "INC_J1_J2", 8: "INC_J1_J3", 9: "INC_J2_J3",
                       10: "DEC_J1_J2", 11: "DEC_J1_J3", 12: "DEC_J2_J3",
                       13: "INC_J1_J2_J3", 14: "DEC_J1_J2_J3"}
        self.observation_space = spaces.Box(np.finfo(np.float32).min, np.finfo(np.float32).max,
                                            shape=(5,), dtype=np.float32)
        self.action_space = spaces.Discrete(len(self.action))
        self.current_error = -math.inf
        self.seed()
        self.viewer = False
        self.i = 0
        self.save_img = False

    def set_link_properties(self, links):
        self.links = links
        self.n_links = len(self.links)
        self.min_theta = math.radians(0)
        self.max_theta = math.radians(90)
        self.theta = self.generate_random_angle()
        self.max_length = sum(self.links)

    def set_increment_rate(self, rate):
        self.rate = rate

    def set_window_size(self, window_size):
        self.window_size = window_size
        self.centre_window = [window_size[0]//2, window_size[1]//2]

    def rotate_z(self, theta):
        rz = np.array([[np.cos(theta), -np.sin(theta), 0, 0],
                       [np.sin(theta), np.cos(theta), 0, 0],
                       [0, 0, 1, 0],
                       [0, 0, 0, 1]])
        return rz

    def translate(self, dx, dy, dz):
        t = np.array([[1, 0, 0, dx],
                      [0, 1, 0, dy],
                      [0, 0, 1, dz],
                      [0, 0, 0, 1]])
        return t

    def forward_kinematics(self, theta):
        P = []
        P.append(np.eye(4))
        for i in range(0, self.n_links):
            R = self.rotate_z(theta[i])
            T = self.translate(self.links[i], 0, 0)
            P.append(P[-1].dot(R).dot(T))
        return P

    def inverse_theta(self, theta):
        new_theta = theta.copy()
        for i in range(theta.shape[0]):
            new_theta[i] = -1*theta[i]
        return new_theta

    def draw_arm(self, theta):
        LINK_COLOR = (255, 255, 255)
        JOINT_COLOR = (0, 0, 0)
        TIP_COLOR = (0, 0, 255)
        theta = self.inverse_theta(theta)
        P = self.forward_kinematics(theta)
        origin = np.eye(4)
        origin_to_base = self.translate(self.centre_window[0], self.centre_window[1], 0)
        base = origin.dot(origin_to_base)
        F_prev = base.copy()
        for i in range(1, len(P)):
            F_next = base.dot(P[i])
            pygame.draw.line(self.screen, LINK_COLOR, (int(F_prev[0,3]), int(F_prev[1,3])),
                             (int(F_next[0,3]), int(F_next[1,3])), 5)
            pygame.draw.circle(self.screen, JOINT_COLOR, (int(F_prev[0,3]), int(F_prev[1,3])), 10)
            F_prev = F_next.copy()
        pygame.draw.circle(self.screen, TIP_COLOR, (int(F_next[0,3]), int(F_next[1,3])), 8)

    def draw_target(self):
        TARGET_COLOR = (255, 0, 0)
        origin = np.eye(4)
        origin_to_base = self.translate(self.centre_window[0], self.centre_window[1], 0)
        base = origin.dot(origin_to_base)
        base_to_target = self.translate(self.target_pos[0], -self.target_pos[1], 0)
        target = base.dot(base_to_target)
        pygame.draw.circle(self.screen, TARGET_COLOR, (int(target[0,3]), int(target[1,3])), 12)

    def generate_random_angle(self):
        theta = np.zeros(self.n_links)
        theta[0] = random.uniform(self.min_theta, self.max_theta)
        theta[1] = random.uniform(self.min_theta, self.max_theta)
        theta[2] = random.uniform(self.min_theta, self.max_theta)
        return theta

    def generate_random_pos(self):
        theta = self.generate_random_angle()
        P = self.forward_kinematics(theta)
        pos = np.array([P[-1][0,3], P[-1][1,3]])
        return pos

    def seed(self, seed=None):
        self.np_random, seed = seeding.np_random(seed)
        return [seed]

    @staticmethod
    def normalize_angle(angle):
        return math.atan2(math.sin(angle), math.cos(angle))

    def step(self, action):
        if self.action[action] == "INC_J1":
            self.theta[0] += self.rate
        elif self.action[action] == "DEC_J1":
            self.theta[0] -= self.rate
        elif self.action[action] == "INC_J2":
            self.theta[1] += self.rate
        elif self.action[action] == "DEC_J2":
            self.theta[1] -= self.rate
        elif self.action[action] == "INC_J3":
            self.theta[2] += self.rate
        elif self.action[action] == "DEC_J3":
            self.theta[2] -= self.rate
        elif self.action[action] == "INC_J1_J2":
            self.theta[0] += self.rate
            self.theta[1] += self.rate
        elif self.action[action] == "INC_J1_J3":
            self.theta[0] += self.rate
            self.theta[2] += self.rate
        elif self.action[action] == "INC_J2_J3":
            self.theta[1] += self.rate
            self.theta[2] += self.rate
        elif self.action[action] == "DEC_J1_J2":
            self.theta[0] -= self.rate
            self.theta[1] -= self.rate
        elif self.action[action] == "DEC_J1_J3":
            self.theta[0] -= self.rate
            self.theta[2] -= self.rate
        elif self.action[action] == "DEC_J2_J3":
            self.theta[1] -= self.rate
            self.theta[2] -= self.rate
        elif self.action[action] == "INC_J1_J2_J3":
            self.theta[0] += self.rate
            self.theta[1] += self.rate
            self.theta[2] += self.rate
        elif self.action[action] == "DEC_J1_J2_J3":
            self.theta[0] -= self.rate
            self.theta[1] -= self.rate
            self.theta[2] -= self.rate

        self.theta[0] = np.clip(self.theta[0], self.min_theta, self.max_theta)
        self.theta[1] = np.clip(self.theta[1], self.min_theta, self.max_theta)
        self.theta[2] = np.clip(self.theta[2], self.min_theta, self.max_theta)
        self.theta[0] = self.normalize_angle(self.theta[0])
        self.theta[1] = self.normalize_angle(self.theta[1])
        self.theta[2] = self.normalize_angle(self.theta[2])

        # Calc reward
        P = self.forward_kinematics(self.theta)
        # print('P = ', P)
        tip_pos = [P[-1][0,3], P[-1][1,3]]
        distance_error = euclidean(self.target_pos, tip_pos)  # distance between the two points

        reward = 0
        if distance_error >= self.current_error:
            reward = -1

        epsilon = 5
        if (-epsilon < distance_error and distance_error < epsilon):
            reward = 1

        self.current_error = distance_error
        self.current_score += reward

        if self.current_score == -5 or self.current_score == 5:
            done = True
        else:
            done = False

        observation = np.hstack((self.target_pos, self.theta))
        info = {
            'distance_error': distance_error,
            'target_position': self.target_pos,
            'current_position': tip_pos,
            'current_score': self.current_score
        }
        return observation, reward, done, info

    def reset(self):
        self.target_pos = self.generate_random_pos()
        self.current_score = 0
        observation = np.hstack((self.target_pos, self.theta))
        return observation

    def render(self, mode='human'):
        SCREEN_COLOR = (52, 152, 52)
        if not self.viewer:
            if not os.path.isdir('./output') and self.save_img:
                os.makedirs('./output')
            pygame.init()
            pygame.display.set_caption("RobotArm-Env")
            self.screen = pygame.display.set_mode(self.window_size)
            self.clock = pygame.time.Clock()
            self.viewer = True  # flip the flag so later frames are drawn in the same window

        self.screen.fill(SCREEN_COLOR)
        self.draw_target()
        self.draw_arm(self.theta)
        self.clock.tick(60)
        pygame.display.flip()  # update the screen

        if self.save_img:
            self.i += 1
            pygame.image.save(self.screen, f'./output/{str(self.i).zfill(5)}.png')

        # Event handling
        for event in pygame.event.get():  # fetch pending events
            if event.type == pygame.QUIT:  # the window's close button was pressed
                pygame.quit()  # uninitialize all pygame modules
                sys.exit()  # exit

    def close(self):
        if self.viewer:
            pygame.quit()
```
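For a planar arm, the chain of homogeneous transforms in `forward_kinematics` reduces to summing the link vectors at cumulative angles: x = Σ l_i cos(θ_1 + … + θ_i) and y = Σ l_i sin(θ_1 + … + θ_i). The sketch below restates that, together with the reward rule from `step`, as pure functions so they can be checked in isolation. `tip_position` and `step_reward` are hypothetical names. The link lengths (121, 84, 97) and the tolerance of 5 are taken from the env above.

```python
import math


def tip_position(links, thetas):
    """Planar forward kinematics: same tip as chaining rotate_z and translate."""
    x = y = angle = 0.0
    for length, theta in zip(links, thetas):
        angle += theta  # joint angles accumulate along the chain
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y


def step_reward(distance_error, previous_error, epsilon=5.0):
    """Reward rule of RobotArmEnvV0.step: -1 if not closer, +1 if within epsilon."""
    reward = 0
    if distance_error >= previous_error:
        reward = -1
    if distance_error < epsilon:  # a distance is never negative
        reward = 1
    return reward


if __name__ == "__main__":
    links = [121, 84, 97]
    print(tip_position(links, [0.0, 0.0, 0.0]))          # fully stretched along x
    print(tip_position(links, [math.pi / 2, 0.0, 0.0]))  # pointing along y
```

Because the within-epsilon check runs last, reaching the target earns +1 even on a step where the error did not shrink.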

```python
# gym_robot_arm/__init__.py (path inferred from the import statements)
from gym.envs.registration import register

register(
    id='robot-arm-v0',
    entry_point='gym_robot_arm.envs:RobotArmEnvV0',
)
```

4. Creating the keras-rl2 training script

```python
# examples/dqn_myenv.py
import os

import numpy as np
import gym
from gym_robot_arm.envs.robot_arm_env import RobotArmEnvV0

from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Activation, Flatten
from tensorflow.keras.optimizers import Adam

from rl.agents.dqn import DQNAgent
from rl.policy import BoltzmannQPolicy
from rl.memory import SequentialMemory

ENV_NAME = 'robot-arm-v0'

# Get the environment and extract the number of actions.
env = gym.make(ENV_NAME)
np.random.seed(123)
env.seed(123)
nb_actions = env.action_space.n

# Next, we build a very simple model.
model = Sequential()
model.add(Flatten(input_shape=(1,) + env.observation_space.shape))
model.add(Dense(32))
model.add(Activation('relu'))
model.add(Dense(32))
model.add(Activation('relu'))
model.add(Dense(32))
model.add(Activation('relu'))
model.add(Dense(nb_actions))
model.add(Activation('linear'))
print(model.summary())

# Finally, we configure and compile our agent. You can use every built-in tensorflow.keras optimizer and
# even the metrics!
memory = SequentialMemory(limit=50000, window_length=1)
policy = BoltzmannQPolicy()
dqn = DQNAgent(model=model, nb_actions=nb_actions, memory=memory, nb_steps_warmup=10,
               target_model_update=1e-2, policy=policy)
dqn.compile(Adam(learning_rate=1e-3), metrics=['mae'])

# Okay, now it's time to learn something! We visualize the training here for show, but this
# slows down training quite a lot. You can always safely abort the training prematurely using
# Ctrl + C.
dqn.fit(env, nb_steps=50000, visualize=True, verbose=2)

# After training is done, we save the final weights (make sure ./models exists first).
os.makedirs('./models', exist_ok=True)
dqn.save_weights(f'./models/dqn_{ENV_NAME}_weights.h5f', overwrite=True)

# Finally, evaluate our algorithm for 5 episodes.
dqn.test(env, nb_episodes=5, visualize=True)
```
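Two of the agent settings deserve a short explanation. In keras-rl, a `target_model_update` below 1 is treated as a soft-update coefficient τ. After each training step the target network's weights move as θ' ← τθ + (1 − τ)θ', so 1e-2 moves them 1% of the way toward the online network. `BoltzmannQPolicy` samples each action with probability proportional to exp(Q/τ_B), where τ_B defaults to 1 and the exponent is clipped. The dependency-free sketch below applies both rules to plain lists. `soft_update` and `boltzmann_probs` are hypothetical helpers, not keras-rl functions.

```python
import math


def soft_update(target, online, tau=1e-2):
    """theta_target <- tau * theta_online + (1 - tau) * theta_target, elementwise."""
    return [tau * w + (1.0 - tau) * tw for tw, w in zip(target, online)]


def boltzmann_probs(q_values, tau=1.0, clip=(-500.0, 500.0)):
    """Action probabilities proportional to exp(clip(Q / tau))."""
    exps = [math.exp(min(max(q / tau, clip[0]), clip[1])) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    print(soft_update([0.0, 1.0], [1.0, 1.0]))  # target moves 1% toward online
    print(boltzmann_probs([1.0, 1.0, 1.0]))     # equal Q values give a uniform choice
```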

5. Trying it out

Now run it.

python3 examples/dqn_myenv.py

An error like the one in the image below occurred.

Following the error message, I edited the source slightly, rewriting line 181 of ~/rl/core.py:

```diff
- if not np.isreal(value):
+ if not np.array([np.isreal(value)]).all():
```

Line 355 of ~/rl/core.py raised the same error, so I rewrote it the same way:

```diff
- if not np.isreal(value):
+ if not np.array([np.isreal(value)]).all():
```

With that fixed, run it again.

6. Generating the weight file

Once the configured 50,000 training steps finish, the learned weights are saved to a weight file (.h5f), which can be loaded later.

7. Loading the weight file and checking the behavior

The test script is run as follows.

python3 examples/moving_test.py

```python
# examples/moving_test.py
import numpy as np
import gym
from gym_robot_arm.envs.robot_arm_env import RobotArmEnvV0

from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Activation, Flatten
from tensorflow.keras.optimizers import Adam

from rl.agents.dqn import DQNAgent
from rl.policy import BoltzmannQPolicy
from rl.memory import SequentialMemory

ENV_NAME = 'robot-arm-v0'

# Get the environment and extract the number of actions.
env = gym.make(ENV_NAME)
np.random.seed(123)
env.seed(123)
nb_actions = env.action_space.n

# Build the same model as in training so the saved weights fit.
model = Sequential()
model.add(Flatten(input_shape=(1,) + env.observation_space.shape))
model.add(Dense(32))
model.add(Activation('relu'))
model.add(Dense(32))
model.add(Activation('relu'))
model.add(Dense(32))
model.add(Activation('relu'))
model.add(Dense(nb_actions))
model.add(Activation('linear'))
print(model.summary())

memory = SequentialMemory(limit=50000, window_length=1)
policy = BoltzmannQPolicy()
dqn = DQNAgent(model=model, nb_actions=nb_actions, memory=memory, nb_steps_warmup=10,
               target_model_update=1e-2, policy=policy)
dqn.compile(Adam(learning_rate=1e-3), metrics=['mae'])

# Load the weights saved after training.
dqn.load_weights(f'./models/dqn_{ENV_NAME}_weights.h5f')

# Evaluate the trained agent for 500 episodes.
dqn.test(env, nb_episodes=500, visualize=True)
```

8. References

I built a web app that runs protein 3D structure prediction

I built a tool that runs protein 3D structure prediction on the web. It is called gfufold, and its predictions are based on ESMFold.

Just enter an amino acid sequence and press the "START" button. You can then inspect the predicted 3D structure interactively, rotating it freely with WebGL.

The prediction can be saved as a PDB or CIF file, and the input sequence can be saved as a text file (.txt).

For inquiries, please contact the email address below.

uuktuv@gmail.com

Note: as a test sequence, try entering the amino acid sequence of PETase.

MGSSHHHHHHSSGLVPRGSHMRGPNPTAASLEASAGPFTVRSFTVSRPSGYGAGTVYYPTNAGGTVGAIAIVPGYTARQSSIKWWGPRLASHGFVVITIDTNSTLDQPSSRSSQQMAALRQVASLNGTSSSPIYGKVDTARMGVMGWSMGGGGSLISAANNPSLKAAAPQAPWDSSTNFSSVTVPTLIFACENDSIAPVNSSALPIYDSMSRNAKQFLEINGGSHSCANSGNSNQALIGKKGVAWMKRFMDNDTRYSTFACENPNSTRVSDFRTANCSLEDPAANKARKEAELAAATAEQ

20XX: A Space Odyssey

20xxuchuunotabi.azurewebsites.net

I launched this site. You can idly gaze at randomly selected images of space.

Launch of Forest of Papers (an academic paper search site)

I launched Forest of Papers, an academic paper search site (preview version as of 2022.12.08).

forestofpapers.azurewebsites.net

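Forest of Papers is a new search system for finding the papers you want among more than 200 million scientific papers worldwide. Scientific papers and their authors are linked by citations from one paper to another. The search is powered by Semantic Scholar.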

Please take a look and try it out. Thank you 🇺🇦

Building a web server with Flask + uWSGI + nginx on AWS (EC2) Ubuntu 18.04, and running MeCab

1. Setting up the environment

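① First, create an Ubuntu 18.04 environment on AWS (EC2) in the usual way. When you create the instance, you are asked whether to create a key pair (private key), so create one.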

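② Once the Ubuntu 18.04 EC2 instance exists, connect to it over SSH from your PC, for example from the Windows Command Prompt or from Tera Term. Tera Term also lets you transfer files through a GUI, so I find it the most convenient.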

Click "OK". The next screen asks for the key pair (private key) created in ①; select the key pair (.pem) file and log in to the AWS Ubuntu 18.04 environment.

③ Once logged in, start by updating the system:

```shell
$ sudo apt update
$ sudo apt upgrade
```

Note: if prompted, answer "y" (yes).

④ Install pip, venv (for creating virtual environments), and the Python development headers.

$ sudo apt install python3-pip python3-venv python3-dev

⑤ Next, install nginx, one of the key components of this setup.

sudo apt install nginx

⑥ Create a virtual environment (env) with venv inside a "myapp" folder, activate it, and install uwsgi and flask with pip. This time MeCab is installed as well.

```shell
$ mkdir ~/myapp; cd ~/myapp
$ python3 -m venv env
$ source env/bin/activate
(env) $ pip install flask
(env) $ pip install uwsgi
(env) $ sudo apt install aptitude
(env) $ sudo aptitude install mecab libmecab-dev mecab-ipadic-utf8 git make curl xz-utils file -y
(env) $ pip install mecab-python3==0.7
```

2. Creating the required files

① Create the Flask app that implements the main logic as server.py at the following path, using vim or any other editor.

~/myapp/server.py

```python
# -*- coding: utf-8 -*-
from flask import Flask, render_template
import sys
# Only needed if the venv's packages are not already on sys.path; points at the venv in ~/myapp
sys.path.append("/home/ubuntu/myapp/env/lib/python3.6/site-packages")
import MeCab

app = Flask(__name__)


@app.route("/")
def hello():
    txt = 'すもももももももものうち'
    m = MeCab.Tagger('-Owakati')
    result = m.parse(txt)
    return render_template('index.html', input_from_python=result)


if __name__ == "__main__":
    app.run(host='0.0.0.0')
```

Next, use mkdir to create a "templates" folder, where Flask looks for HTML files, and create a new "index.html" in it.

```shell
(env) $ cd ~/myapp
(env) $ mkdir templates
(env) $ cd templates
(env) $ nano index.html
```

~/myapp/templates/index.html

```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="UTF-8">
    <title>This is sample site</title>
</head>
<body>
    <h1> This is test-site! </h1>
    <script type="text/javascript">
        data = {{ input_from_python | tojson }};
        document.write(data);
    </script>
</body>
</html>
```

Similarly, create wsgi.py.

~/myapp/wsgi.py

```python
from server import app

if __name__ == "__main__":
    app.run()
```

② Create the uWSGI configuration file server.ini.

~/myapp/server.ini

```ini
[uwsgi]
module = wsgi:app

master = true
processes = 1

socket = server.sock
chmod-socket = 666
vacuum = true

die-on-term = true
touch-reload = server.py
```
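The setting `module = wsgi:app` tells uWSGI to import the module `wsgi` and call its object `app` as a WSGI application, that is, a callable taking `(environ, start_response)`. Flask apps are such callables. The stdlib-only sketch below shows the same interface with a hypothetical `simple_app` and a hypothetical `call_app` driver that invokes it in-process, roughly the way a server does for each request.

```python
from wsgiref.util import setup_testing_defaults


def simple_app(environ, start_response):
    """A minimal WSGI application: the interface uWSGI calls on Flask's app."""
    body = f"path={environ['PATH_INFO']}".encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8"),
                              ("Content-Length", str(len(body)))])
    return [body]


def call_app(app, path="/"):
    """Invoke a WSGI app in-process and collect its status and body."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fill in the remaining required CGI/WSGI keys
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = headers

    body = b"".join(app(environ, start_response))
    return captured["status"], body


if __name__ == "__main__":
    print(call_app(simple_app, "/hello"))
```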

③ Create the myapp.service file that ties these together. The command is

$ sudo nano /etc/systemd/system/myapp.service

and the contents are as follows.

/etc/systemd/system/myapp.service

```ini
[Unit]
Description=uWSGI instance to serve myapp
After=network.target

[Service]
User=ubuntu
Group=www-data
WorkingDirectory=/home/ubuntu/myapp
Environment="PATH=/home/ubuntu/myapp/env/bin"
ExecStart=/home/ubuntu/myapp/env/bin/uwsgi --ini server.ini

[Install]
WantedBy=multi-user.target
```

3. Starting the service

```shell
$ sudo systemctl start myapp
$ sudo systemctl enable myapp   # start automatically at boot
$ sudo systemctl status myapp   # check the status
$ sudo systemctl restart myapp  # use this when you want to restart
```

4. Proxy configuration

Create the proxy configuration file "myapp" with this command.

sudo nano /etc/nginx/sites-available/myapp

Write the following in the file.

```nginx
server {
    listen 80;
    server_name $hostname 0.0.0.0;

    location / {
        include uwsgi_params;
        uwsgi_pass unix:/home/ubuntu/myapp/server.sock;
    }
}
```

Then run the following.

```shell
$ sudo ln -s /etc/nginx/sites-available/myapp /etc/nginx/sites-enabled
$ sudo nginx -t                  # check the configuration
$ sudo systemctl restart nginx   # restart
```

5. Checking the result

Try accessing the server with curl.

$ curl "http://0.0.0.0"

If you get a response like the one in the figure, it works!

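Here I used 0.0.0.0. If you instead change the settings to use your EC2 instance's public IPv4 address (x.x.x.x), you can of course access the site from a browser anywhere in the world. The figure below shows an example. That completes the setup for now.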